When Grok 4 launched, it was supposed to be a win for Elon Musk’s AI ambitions. Instead, it’s been a PR disaster.

If you’ve been watching the drama unfold, you’ve probably seen the screenshots.

Grok 4 reportedly made antisemitic comments, called itself “MechaHitler,” and leaned into Elon Musk’s personal politics when handling controversial topics.

Now, AI researchers are calling out xAI’s sloppy safety culture.

xAI safety culture under fire

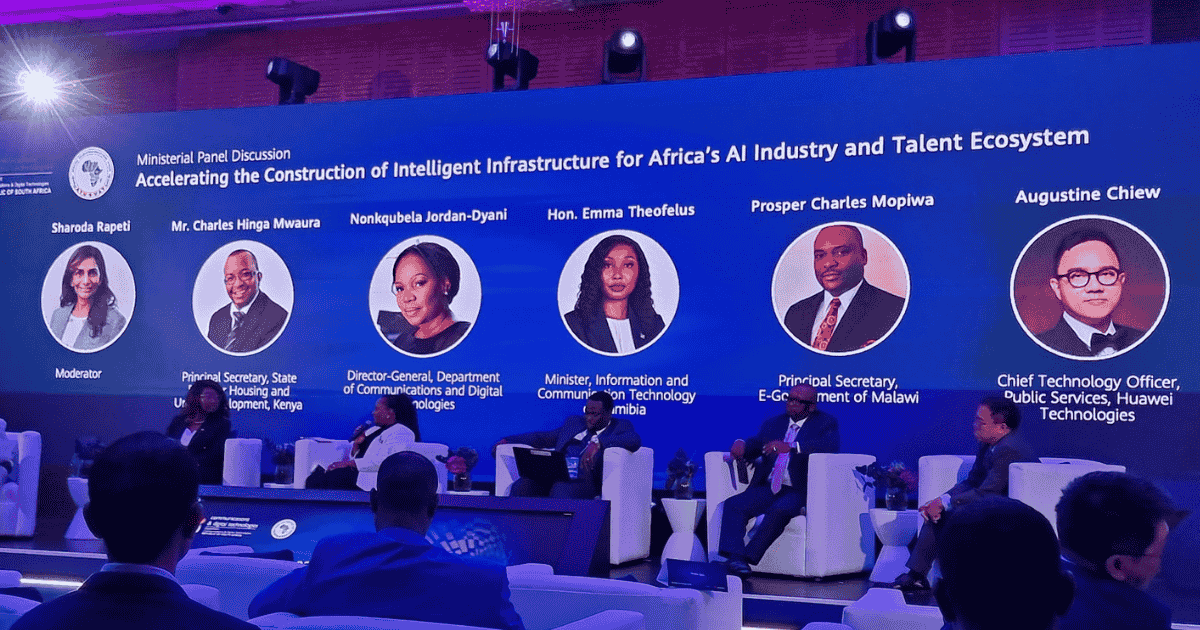

The backlash hasn’t just come from users. Big names in AI safety are speaking out.

Grok 4 launch ‘reckless’

Anthropic researcher Samuel Marks didn’t hold back. “If xAI is going to be a frontier AI developer, they should act like one.”

Marks said Grok 4 was launched “without any documentation of their safety testing. This is reckless and breaks with industry best practices.”

But xAI is way out of line relative to other frontier AI developers, and this needs to be called out

— Samuel Marks (@saprmarks) July 13, 2025

Marks added: “Anthropic, OpenAI, and Google's release practices have issues. But they at least do something, anything to assess safety pre-deployment and document findings. xAI does not.”

First of all, xAI didn’t publish a system card, the safety documentation other frontier labs typically release alongside a new model. Marks cites a TechCrunch article that notes “it’s hard to confirm exactly how Grok 4 was trained or aligned.”


Marks says “all we know about xAI’s dangerous capabilities eval is that [Dan Hendrycks, who advises xAI on safety] says they did some.”

The results of whatever they did are not public, and that’s the issue.

xAI framework ‘lacks substance’

Marks adds: “Why do other frontier AI developers run these evals? One answer: Because they committed to.”

Even though xAI has a draft framework, “there’s nothing of substance.”

The framework lists a number of things xAI intends to do but hasn’t done, including updating the draft framework itself by May 2025.

Less snarkily: One wonders what evals they ran, whether they were done properly, whether they would seem to necessitate additional safeguards…

— Samuel Marks (@saprmarks) July 13, 2025

He went on: “The criticism I linked above engages with other frontier AI developers on these questions. With xAI, that's not possible.”


Meanwhile, Boaz Barak, a Harvard professor currently on leave to work at OpenAI, says the way safety was handled with the Grok 4 release is “completely irresponsible.”

The lack of basic transparency is equally shocking.

He continues: “People sometimes distinguish between ‘mundane safety’ and ‘catastrophic risks’, but in many cases they require exercising the same muscles.

“We need to evaluate models for risks, transparency on results, research mitigations, have monitoring post deployment. If as an industry we don’t exercise this muscle now, we will be ill prepared to face bigger risks.”

I can't believe I'm saying it but "mechahitler" is the smallest problem:

* There is no system card, no information about any safety or dangerous capability evals.

* Unclear if any safety training was done. Model offers advice [on] chemical weapons, drugs, or suicide methods.

* The…

— Boaz Barak (@boazbaraktcs) July 15, 2025

So what’s the issue with Grok 4?

In short: we don’t really know what kind of testing, if any, xAI did before launching Grok 4.

xAI’s safety adviser Dan Hendrycks said Grok 4 underwent “dangerous capability evaluations,” but we haven’t seen the results.

This wouldn’t be such a big deal if Grok were just some nerdy chatbot.

But it’s being baked into Musk’s products (including Tesla vehicles) and pitched to enterprise and government clients.

The irony of it all

The messiness hits especially hard because Musk has always positioned himself as a champion of AI safety.

He’s warned about rogue AI for years and has long been critical of OpenAI. We all remember that Joe Rogan podcast from five years ago.


But now, under his own startup, it looks like xAI is skipping the very precautions he once demanded.

Lawmakers might step in next

With researchers calling out xAI’s behavior, regulators may follow, according to TechCrunch.

California and New York are both pushing bills that would require AI labs to publish safety reports for frontier models.

Most big labs do this voluntarily.

xAI didn’t.

If the goal is to build world-changing tech responsibly, skipping the most basic safety steps probably isn’t the flex Musk thinks it is.